Published 2nd Mar, 2019

Let's consider a thought experiment. Imagine you are sitting in a classroom and the professor shows you a small yellow pouch. The pouch is rather plain, but then he spices things up. He tells you there is a chance that there is something valuable inside it, but there's also a possibility that there is nothing. He offers the entire class, you and your fellow classmates, a chance to buy it off him. If you were playing this game and had to pay real money for the bag, what would you do? What sum would you be comfortable paying for this pouch?

Do you have an answer? Good.

Let's spice this up some more. Imagine the professor is a Harvard alumnus who now teaches a course on introductory finance at MIT. Do you want to change your initial bid in the face of this new information? Yes? No?

How about we take it one step further? Imagine a fellow classmate, someone you know has already taken this course, suddenly bids Rs. 1000 out of the blue. What would you do then? Would you bid higher as this new development unfolds?

This is a case study I borrowed from a course on edX, where a professor runs this experiment in a live setting. In his case, a girl who happened to have previously taken his course wins the bag after a few rounds of bidding that take the price to about $80. She then finds out that the bag contains an Apple Watch, a product retailing for about $280. Considering the girl had already taken the course, it's likely she knew something about the professor that nobody else did. It's also possible other students were making their own calculations. Maybe someone reckoned that a tenured professor from Harvard would never put an empty bag in front of his students. It's possible someone else was simply reacting to a competitor's bid, or maybe a combination of these forces pushed the price to $80. Although the actual value of the bag was considerably higher than the price attributed by the market, the professor argues that the wisdom of the crowds, in this case, helped in deciding the price based on all the information available. But is the collective wisdom of the crowds always rational?

Suppose the bidding had gone up to $10,000. Imagine college-going students paying that kind of money for a bag whose contents are unknown. Unless they are all related to Bill Gates, it's unlikely they should be bidding anywhere close to that sum. This is because a rational investor makes decisions based on a prudent analysis of the risks and rewards associated with the game. Irrespective of what's in the bag, a rational investor would have bid a sum that's insignificant relative to his net worth, because so little information is available. If a college student were bidding $5, it would probably make sense, but by bidding $10,000 a student would have violated the basic covenant of prioritising risk mitigation over maximising rewards. But what if the bidding involved a bunch of billionaire hedge fund managers? Would a $10,000 bid be justified then? No, because it's highly unlikely a professor would be giving away a $10,000 bag in an experiment. Even if you are worth a billion dollars, a rational decision must be premised on the maximum reward you can potentially reap from the game. However, more often than not, investors forgo this age-old maxim in the pursuit of extravagant riches. The wisdom of crowds can quickly degenerate into the madness of mobs when rational investors come face to face with their most formidable nemesis: their own mind.
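To make the two limits in this argument concrete, here is a minimal sketch in Python. It is only an illustration, not a valuation method: the function name `max_sensible_bid`, the 0.1% `risk_fraction`, the student's $5,000 net worth and the $300 ceiling on the prize are all assumptions we picked for the example.

```python
def max_sensible_bid(net_worth: float, max_plausible_prize: float,
                     risk_fraction: float = 0.001) -> float:
    """Cap a bid by two limits: what the bidder can afford to lose,
    and the most the prize could plausibly be worth."""
    # Assumed rule of thumb: risk only a tiny slice of net worth on a blind bet.
    affordable_loss = net_worth * risk_fraction
    # Never pay more than the best-case value of what is in the bag.
    return min(affordable_loss, max_plausible_prize)


# Hypothetical bidders: a student worth $5,000 and a billionaire fund manager.
student_bid = max_sensible_bid(net_worth=5_000, max_plausible_prize=300)
billionaire_bid = max_sensible_bid(net_worth=1_000_000_000, max_plausible_prize=300)

print(f"Student cap: ${student_bid:,.2f}")
print(f"Billionaire cap: ${billionaire_bid:,.2f}")
```

Under these assumed numbers the student is held back by net worth, while the billionaire is held back by the most the bag could be worth. Neither gets anywhere near $10,000.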

Although most people like to believe that irrational exuberance is a product of big-bang events, it's not. Instead, it's a by-product of systemic bias creeping into the minds of individual investors. This bias can spread pretty quickly, by way of psychological contagion, if the right conditions are met. Consider, for example, the story we covered last week on the real estate bubble. We received a barrage of comments from a whole lot of exuberant readers, well-wishers and critics alike. While we are extremely grateful to everyone who wrote back to us, we did observe a rather interesting pattern. The fiercest critics were almost always people who were either directly or indirectly financially invested in real estate. This pattern, however, is to be expected. Once you build an investment hypothesis, you are more likely to search for, interpret, recall or favour information in a way that confirms your pre-existing belief. Behavioural psychologists call this confirmation bias, and because our article did not lend credence to the critics' investment hypothesis, they were more inclined to pick holes in our theory. This sort of thinking can often have devastating consequences because it gives investors a false sense of infallibility. It prevents them from thinking clearly and adequately assessing the risks associated with their investment. To better understand the source of this psychological discomfort, let's look at the arguments themselves.

Most people who were extremely critical of our story had one main contention: that our analysis was limited to a macro analysis of the real estate sector when in fact a more prudent analysis would have involved an exposition of individual micro markets. The argument was this. Real estate markets don't behave like one big correlated entity, i.e. home prices in Delhi could be falling whilst prices in Mumbai could be on a perpetual rise. If you're living in Mumbai, real estate investments would still seem like a pretty good bet, and so the argument was that any nationwide analysis is useless because markets in Mumbai, Bengaluru, Delhi etc. all behave differently. While this seems like a pretty reasonable argument, it's one of the many logical fallacies that undermine the process of seeking a more resolute understanding of the subject.

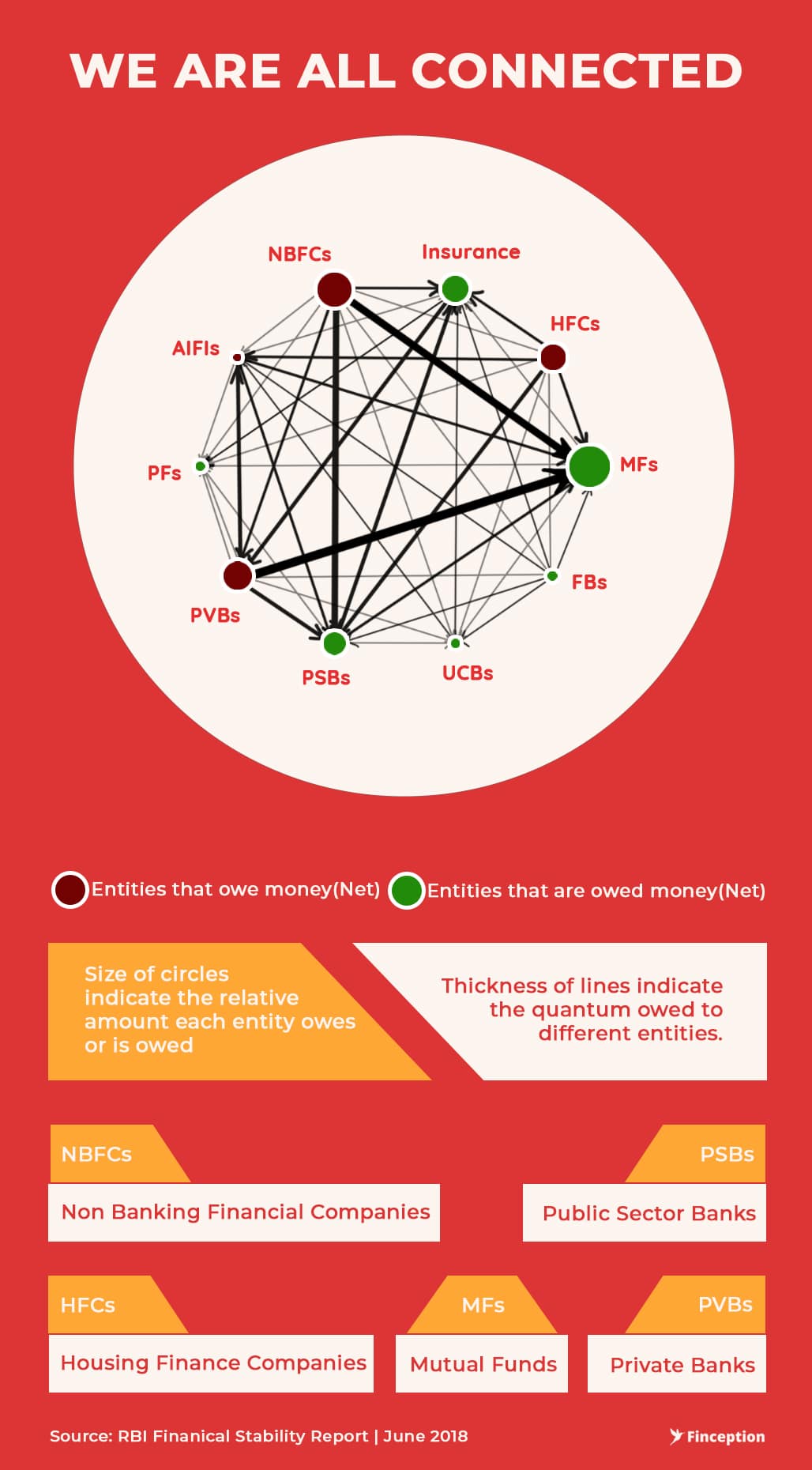

In this particular case, the investor reduces the argument to a false dichotomy, i.e. either you do a micro-market analysis or you're wrong. This line of reasoning fails by limiting the options to two when there are in fact more to choose from. The real estate industry is connected to the economy in more ways than one. Imagine a developer operating in both Delhi and Bengaluru at the same time. If the builder experiences stress in Delhi, it's likely to spill over to his assets in Bengaluru as well. This could be further compounded by a funding crunch arising out of a major weakness in the banking industry, in which case the micro markets no longer behave as if they are uncorrelated. Instead, they exhibit high levels of correlation, which is why we see housing bubbles emanating at the macro level affect multiple prongs of a country's economic system.

An Example of the Interconnectedness of the Banking system

This is in no way a suggestion that any micro-market analysis is futile. A more nuanced analysis is almost always premised on smaller markets operating in different cities. It's also quite possible that the real estate markets could correct themselves without ever breaking down completely. Banks could figure out a way to wriggle out of a funding crunch without ever suffering losses, and the industry could have multiple exit options. But the larger point here is that a plurality of ideas helps stave off bias. Instead of simply sticking to just two options, a gamut of ideas can help investors better understand the sector and further refine their hypothesis. Behavioural psychologists have a name for such people: Foxes.

'The fox knows many things, but the hedgehog knows one big thing.' — Greek poet Archilochus

In his seminal book on political forecasting, Expert Political Judgment (2006), Philip Tetlock dissects the difference between two personality types: Hedgehogs and Foxes. According to him, many political pundits are hedgehogs, people who are psychologically biased to be specialists. These people often make bombastic claims about future events and characterise them using definite, narrow outcomes without considering the possibility of other sideline events affecting these outcomes. This is what a hedgehog prediction would look like: "The North Korean crisis will end when Trump is elected to office." Foxes, on the other hand, are more conservative with their predictions. These are people who seek to know a little about a lot of different things. They often borrow from a range of ideas and form a more nuanced opinion that is qualified by multiple ifs and buts, something that looks like this: "The North Korean crisis could see some kind of closure if there is a deal between the two countries over the course of the next two years. However, it is unlikely to materialise when both leaders are antagonistic in nature."

In a bid to critically analyse the efficacy of predictions from both sides, Tetlock studied the reliability of their predictions over a period of 18 years to see who had a better chance at success. He analysed the predictions of over 100 experts and generally found that the more confident the experts (hedgehogs) were about their predictions, the less accurate they tended to be, in some cases doing no better than random guessing. Tetlock's research showed that self-proclaimed experts who are overly confident about their predictions are often less reliable at predicting what will happen in the future, even though they might look and sound good. On the other hand, those who were more comfortable questioning and synthesising multiple viewpoints, and who stuck to a broad range of outcomes, had a visible advantage. The bottom line is this: an expert who offers you a long, winding explanation and bores you with an array of "howevers" is more likely to be right about what's going to happen.

Point of Interest: Ask yourself what personality type you are. Be honest now :)

Tetlock also made another observation. When these experts were confronted with evidence of their failed predictions, they would immediately attribute the cause of failure to external factors. Instead of saying, "I had the wrong theory," the experts would declare, "It almost went my way," or "It was the right mistake to make under the circumstances," or "I'll be proved right later." In an attempt to reduce the psychological discomfort (cognitive dissonance) of holding contradictory beliefs, people often choose to rationalise their predictions using flimsy accounts of the past. The rational man quickly devolves into the rationalising man.

Take, for example, the classic case of cognitive dissonance described in the book When Prophecy Fails. A doomsday cult leader once predicted that a great flood would destroy the world and that aliens would arrive to rescue the faithful. However, despite a considerable wait, the doomsday prophecy failed to materialise. This would have been the ideal moment for the cult's followers to re-evaluate their hypothesis and abandon the group and the leader altogether. But that did not happen. Instead, the members of the cult rationalised that the world had been saved by the strength of their faith and continued to trudge along. It's never a good idea to rationalise bad decisions, and investors would find this out the hard way when the global economy failed in almost catastrophic fashion in 2008.

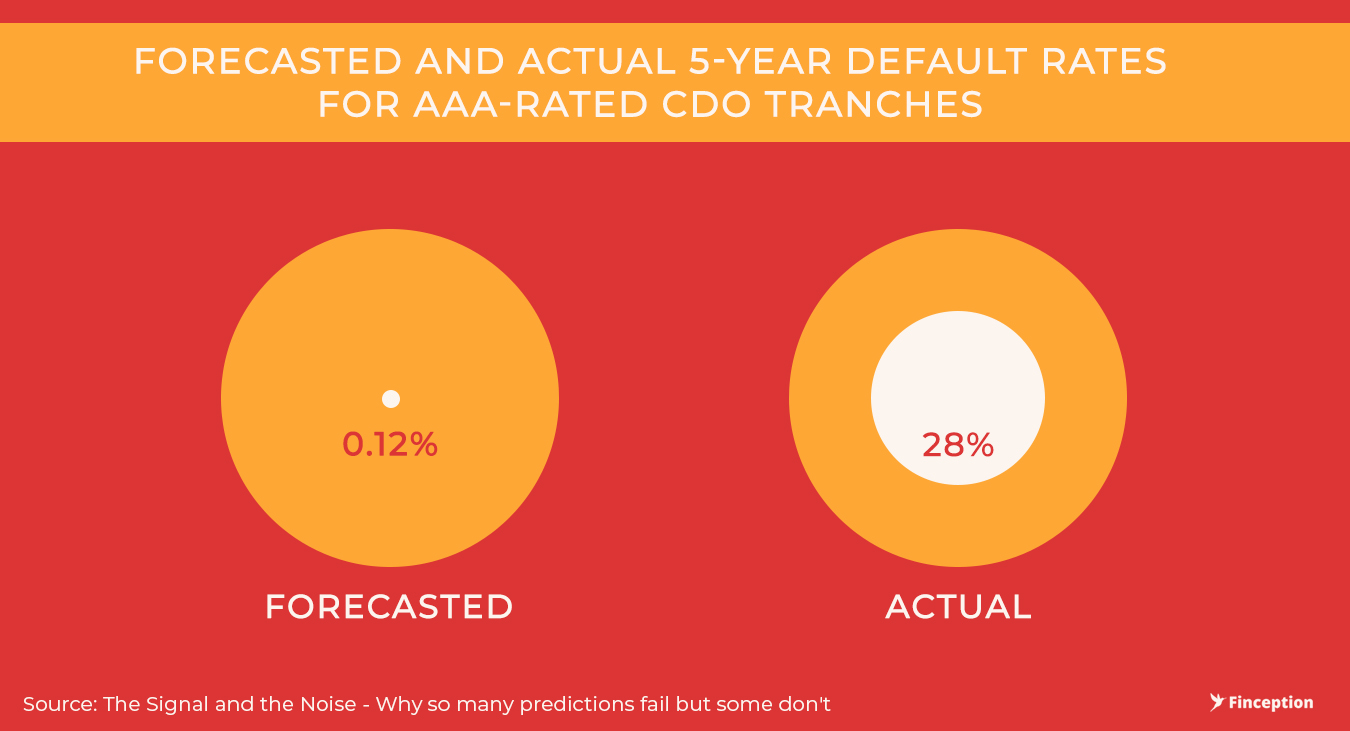

In his bestselling book "The Signal and the Noise", Nate Silver carefully lays out an exposition of the greatest predictive blunder that ultimately gave way to the financial crisis of 2008. According to him, among the primary culprits that were never fully brought to book were the rating agencies. The rating agencies were responsible for assessing the likelihood of trillions of dollars in mortgage-backed securities going into default. The simplest building block of a mortgage-backed security is a home loan, and the likelihood of a salaried, well-to-do homeowner defaulting on his payments is almost negligible. But greedy brokers backed by Wall Street capitalists found a way to corrupt the system: they started disbursing home loans to increasingly shady (and also gullible) people, people with no jobs or no steady income, in the hope of making an extra buck. To make these loans appealing to investors, they put the good loans and the not-so-good loans together in a special type of security called a Collateralized Debt Obligation, or CDO. The hope was that once the mixing was done well enough, the diversification would protect the whole thing from going bust, i.e. even if some homeowners defaulted, the good homeowners would ensure that the CDO survived. Rating agencies, whose income largely came from these investment banks, were in full agreement and gladly rated these CDOs AAA, the gold standard for any asset. Standard & Poor's, one of the largest rating agencies, told investors, for instance, that when it rated a particular CDO at AAA, there was only a 0.12% probability (about 1 chance in 850) that it would fail to pay out over the next five years.

By the end of it all, however, around 28 per cent of the AAA-rated CDOs went bust. This was an error of epic proportions, and it all began with a systemic bias creeping in at the top level. For one, the rating agencies, along with some new-age economists, were beginning to think that the wisdom of the crowds had finally matured to a point where catastrophes were relics of the past. When someone pointed out anything to the contrary, they would immediately be sidelined on the pretext that they were some sort of doomsday conspiracy theorist. When Raghuram Rajan presented a paper on the risks associated with CDOs and other complex financial instruments, he came under persistent attack for being a Luddite, a person who resists change.
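To put the size of that miss in perspective, here is a quick back-of-the-envelope calculation in Python. It uses only the two figures quoted above, the 0.12% estimate and the roughly 28 per cent outcome. The variable names are ours, and the comparison is deliberately crude since it ignores differences in time horizon and in which CDOs are being counted.

```python
# Figures quoted in the article (via Nate Silver's account).
predicted_failure_rate = 0.0012  # S&P's stated 0.12% chance of failure over five years
observed_failure_rate = 0.28     # roughly 28% of AAA-rated CDOs went bust

# Odds implied by the estimate, and how far off the estimate turned out to be.
implied_odds = 1 / predicted_failure_rate
miss_factor = observed_failure_rate / predicted_failure_rate

print(f"Implied odds of failure: about 1 in {implied_odds:.0f}")
print(f"Observed failures were about {miss_factor:.0f} times the estimate")
```

The exact arithmetic gives odds slightly different from the rounded "1 in 850" figure. The key result is the second one: failures came in at more than two hundred times the rate the ratings implied.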

There was another popular belief within the financial community: that the real estate markets were not highly correlated. As Nate Silver points out, the belief was that if a carpenter in Cleveland defaults on his mortgage (house loan), it should have no bearing on whether a dentist in Denver does. Under this scenario, the risk of losing your bet would be exceptionally small because a CDO, as we have already noted, is an amalgamation of several housing loans pooled into one happy entity. However, by 2007, home prices were declining across the board, and yet the rating agencies were selectively cherry-picking information to show that the AAA-rated CDOs were good. Instead of taking stock of events and re-rating these instruments (considering they had no prior experience rating these things), they stuck to their guns. As the housing crisis peaked and homeowners started defaulting on their payments, it finally began to seem as if the carpenter in Cleveland was defaulting for the same reasons as the dentist in Denver (higher interest payments as home prices started tumbling). The rating agencies did try to make some adjustments to their complicated rating models, but all of the changes were woefully inadequate. By the time the dust settled, the U.S. economy was on the verge of collapse.
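To see why the correlation assumption matters so much, consider a toy pool of home loans. This is a deliberately simplified sketch in Python, not how rating agencies actually modelled CDOs. The 5% default probability, the pool of five loans, and the rule that the investor loses only if every loan defaults are illustrative assumptions of ours, and `prob_all_default` is a hypothetical helper.

```python
def prob_all_default(p_default: float, n_loans: int, correlated: bool) -> float:
    """Probability that every loan in the pool defaults.

    correlated=False: each borrower defaults independently of the others.
    correlated=True:  borrowers default in lockstep (one common cause),
                      so the pool behaves like a single loan.
    """
    if correlated:
        return p_default
    return p_default ** n_loans


# Assumed numbers for illustration only.
p_default, n_loans = 0.05, 5

independent = prob_all_default(p_default, n_loans, correlated=False)
lockstep = prob_all_default(p_default, n_loans, correlated=True)

print(f"Independent defaults: about 1 in {1 / independent:,.0f}")
print(f"Lockstep defaults:    about 1 in {1 / lockstep:,.0f}")
print(f"Risk understated by a factor of about {lockstep / independent:,.0f}")
```

Real housing markets sit somewhere between these two extremes. The closer they are to lockstep, the less protection pooling offers, and a rating built on the independence assumption understates the risk by orders of magnitude.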

When heads of these rating agencies found themselves in the hot seat they immediately shirked responsibility and claimed to have been unlucky. They rationalised their behaviour by blaming an external contingency — housing prices. Looking back we know for a fact that this is not true. The rating agencies were aware that prices were on the decline and in some cases were actively predicting the decline but they were so sure of their models that they did not want to change their original hypothesis. Confirmation bias had given way to denial. The denial further emboldened other frenzied investors. The mad mob baying for everlasting riches found a way to precipitate a crisis like no other and when the party ended, the community rationalised their behaviour suggesting that there was no way anyone could see it coming.

Perhaps the only way, then, to protect yourself from this ill of self-confirming theories and belief systems is to adopt the idea of falsification. Investors should actively seek to falsify their own views in a bid to better understand their hypothesis. If you find evidence that contradicts your beliefs, don't scoff at it. Instead, embrace it and see if you need to abandon or modify some of your assumptions. Scepticism in the game of investing can go a long way in protecting your wealth, and most investors would agree that it is much easier to be rational when your capital is intact.

We have relied on excerpts from “Adaptive Markets: Financial Evolution at the Speed of Thought” by Andrew Lo and Nate Silver’s “The Signal and the Noise: Why So Many Predictions Fail-but Some Don’t” to put this story together.

. . .

Review & Analysis by Pawan, IIM Ahmedabad

Disclaimer: No content on this website should be construed to be investment advice. You should consult a qualified financial advisor prior to making any actual investment or trading decisions. The author accepts no liability for any actual investments based on this article.